#2823 closed defect (bug) (fixed)

Improve IP Geolocation Results

| Reported by: |

|

Owned by: |

|

|---|---|---|---|

| Milestone: | Priority: | normal | |

| Component: | Events API | Keywords: | |

| Cc: |

Description

There are a couple problems with our current data set. They're not strictly related to each other, but I'm creating a single ticket for this because I'm guessing that the solution to both will be the same: switch from our current data source to a better one.

- Problem #1: Incorrect IPv4 results. No data source will be perfect, but [several online database had correct results for instances where ours weren't https://make.wordpress.org/core/2017/04/14/nearby-wordpress-events/#comment-32448].

- Problem #2: No IPv6 support. Without this, the results will quickly lose effectiveness. As of May 2017, IPv6 adoption is at 18% globally and rising relatively fast. Some countries are as high as 32%.

It looks like our database was updated recently (March 2017), but I can't find any info on where the original data comes from. @dd32, @tellyworth, do either of you know? Do you have any thoughts on other potential sources?

Attachments (3)

Change History (57)

#2

@

@

8 years ago

Oh, and the ip2location probably comes from here: http://lite.ip2location.com/database/ip-country-region-city-latitude-longitude

May or may not be the free one. Not sure.

#3

@

@

8 years ago

- Owner set to iandunn

- Status changed from new to accepted

Ah, yeah, it looks like IP2LOCATION-LITE-DB5.csv. It could also be the commercial version, but I'll need to look closer when I have time. Thanks!

They've got IPv6 available. If we're not already using the commercial version, then it's probably worth upgrading.

#4

@

@

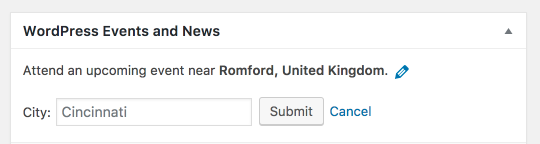

8 years ago

At the time I first encountered those years ago, they were an outdated copy of the free version. I updated the data to the latest copy, but then we didn't use it for much, so I let it go. Looking at it now, it appears to have many more rows than the free version, so I suspect somebody updated it to the non-free version. They may still have access to that and can get the big dataset, if we know who updated it last.

#5

@

@

8 years ago

@tellyworth did you update that data during development? I seem to recall you wrote some kind of importer for some data source for something remotely related to this?

#6

@

@

8 years ago

There's a bin script for updating the geonames data, but I didn't see anything for the IP geolocation.

#7

@

@

8 years ago

IP2LOCATION-LITE-DB5.csv also has the names in proper Sentence Case, rather than all uppercase, so it's definitely not that source, unless something went really wrong during the import.

Having the data improperly cased is another problem that would be solved by upgrading.

This ticket was mentioned in Slack in #core by iandunn. View the logs.

8 years ago

#9

@

@

8 years ago

Another instance of bad geolocation results: https://wordpress.slack.com/archives/C02RQBWTW/p1494634179842054

This ticket was mentioned in Slack in #core by dd32. View the logs.

8 years ago

#12

follow-up:

↓ 15

@

@

8 years ago

Howdy,

There are a few problems here:

1) The ip2location data on WP.org is very very old.

2) WP.org doesn't have a subscription/license to use the data that I am aware of.

3) I don't think there is any legitimate use case to pass an ip in the query string - this just opens up the API to abuse and most likely violates the terms/EULA of using this data.

4) The query performance is very poor and probably won't scale to the volume that we need. For ip2location specifically we have already solved this problem on WordPress.com, so I would suggest looking at the code there. I haven't looked at the geonames code much, but I suspect it suffers from similar issues.

5) We need a way to keep the information always current if we are going to use it. It changes enough on a regular basis that once it's > 60 days old it starts becoming noticeably wrong.

The first problem to solve is #2. I don't think we really need ipv6 support yet since the API should always use REMOTE_ADDR and the WordPress.org API currently doesn't have any AAAA records.

#13

@

@

8 years ago

The first problem to solve is #2. I don't think we really need ipv6 support yet since the API should always use REMOTE_ADDR and the WordPress.org API currently doesn't have any AAAA records.

The IP is not the REMOTE_ADDR in most cases - it's the WordPress user client IP, not the server IP. There's some "anonymisation" stuff in there - see maybe_anonymize_ip_address(). The exception is when the client IP provided is a private range, in which case it uses REMOTE_ADDR.

Would it be better if the client browser was making the request directly? (and not proxied through WordPress) - the detect-location call would need to be made on every request in that case, and not just on the first call / when user searches for a location change.

#14

@

@

8 years ago

I see why it was implemented this way, but I think it's a clear violation of the EULA and the endpoint will quickly be abused. I am not too worried about the traffic once #4 above is fixed, but I guess there are some general performance and privacy concerns that will need to be addressed if the implementation is changed.

#15

in reply to:

↑ 12

@

@

8 years ago

Replying to barry:

1) The ip2location data on WP.org is very very old.

2) WP.org doesn't have a subscription/license to use the data

3) I don't think there is any legitimate use case to pass an ip in the query string

4) The query performance is very poor and probably won't scale to the volume that we need

5) We need a way to keep the information always current if we are going to use it

#1, #2, and #5 are all easy to solve.

#3 (EULA)

On a technical level, it's easy to switch the client to request the data directly from api.w.org instead of proxying through their WP site.

For anyone who's concerned about privacy with that architecture, we can release a plugin that will disable the Events section of the Dashboard widget entirely. We could replace it with a simple link to the Events page on make/Community.

Does anyone think that would not be enough to mitigate privacy concerns?

#4 (performance)

I'm going to start digging into this and looking for ways to optimize. If you can say which queries you're concerned the most about, that would be helpful.

Almost all of the queries are there to support extra functionality and edge cases. Worst case scenario, the queries in the following functions can all be disabled without preventing the majority of users from successfully retrieving events: guess_location_from_ip(), guess_location_from_geonames_fallback(), get_valid_country_codes(), get_country_from_name(), and get_city_from_coordinates()

That can be done at-will on the API side without breaking the client in Core. We could even do it automatically during traffic spikes if we have some kind of signal from monitoring agents to check.

#16

@

@

8 years ago

I have been thinking about the EULA a bit, I think if we remove the actual passed IP geolocation data from the response and just return the events data it will be fine. We would then use the client IP geolocation internally, in "business logic" to figure out the closest events and return them. We still need to work on the other issues, but they are technical and thus easier to fix.

#17

@

@

8 years ago

That sounds like a good idea to me. I think the detailed steps look like this:

- The initial request. The client doesn't have a location saved at this point.

- Client requests events near

{ip address} - API returns the

eventsobject like it does today, but nolocationobject - Client parses the city name from first event (e.g. "Seattle"), and saves that as the location

- Client requests events near

- The second request. The client has a city name saved at this point.

- The client requests events near

{city name}, just like it does today when a user manually types in a location - The API matches the city name to lat/long coordinates using the Creative Commons data from geonames.org

- The API returns the

eventsobject, and alocationobject, just like it does today. - The client saves the full

locationobject as its location

- The client requests events near

- All future requests. The client has a saved

latandlongat this time- These would be exactly the same as they are today.

So, the client would have to make 2 requests instead of 1, but it would eventually get the same behavior as we have today. The client could do step #2 asynchronously after the events received in step #1 have been displayed, in order to make things a little smoother.

All of the above only applies if the user hasn't manually entered a location. If they enter a location manually, then we skip step #1, and go straight to #2.

@barry, does that sound right to you? If so, I'll get started on the necessary changes.

#18

follow-up:

↓ 19

@

@

8 years ago

To clarify, are you thinking we'd remove the IP from the client's request and just use REMOTE_ADDR on the API side, or would the client still be able to specify an arbitrary IP?

#19

in reply to:

↑ 18

@

@

8 years ago

Replying to iandunn:

To clarify, are you thinking we'd remove the IP from the client's request and just use

REMOTE_ADDRon the API side, or would the client still be able to specify an arbitrary IP?

Clients can specify an arbitrary IP. I was initially concerned about the privacy implications of the clients connecting directly to the .org API which would provide their full IP and not the truncated version that the current proxy approach uses. I thought this would be the only place that a .org site sends the client IP to WordPress.org (as opposed to the server IP which is sent in many places). However, I then remembered that we use the W.org CDN for serving emoji in core, so I am not sure if it's a huge problem.

I'm ok with any approach that satisfies the "business requirements" of this widget and doesn't violate the EULA for the data source(s) we use. The rest of the stuff like performance and scaling we can figure out pretty easily.

#20

@

@

8 years ago

Oh, and I forgot, if we send arbitrary IPs we might need IPv6 support which I haven't looked at yet. If the privacy concerns aren't an issue, having the client connect directly to the API endpoint might be the cleaner implementation.

#21

@

@

8 years ago

@dd32 has a really good idea to modify the process a bit. I think will make things simpler on the client side, while still staying within the EULA.

When the client makes a request for events and provides the IP, we geolocate that IP from ip2location, and fetch the events that are close to it. We do not return the location data provided by ip2location to the client.

At this point, the response object being built looks like this:

{

'location: {},

'events: {

{

'id' => 1234,

'date' => 194323423,

'type' => 'wordcamp',

'title' => 'WordCamp Seattle',

'location' => 'Seattle, WA, USA'

},

// more events here

}

}

The response.location is empty. The response.events[0].location field above is pulled from wporg_events, and originally comes from the WordCamp.org API.

The next step is to take that response.events[0].location field, and search our geonames table for it. geonames is Creative Commons licensed, and provides latitude and longitude.

So now, we can populate the location field in the response with the data we've pulled from geonames. Now the response object looks like this:

{

'location: {

'name' => 'Seattle',

'latitude' => '47.200324',

'longitude' => '-121.32523',

'country' => 'US',

},

'events: {

{

'id' => 1234,

'date' => 194323423,

'type' => 'wordcamp',

'title' => 'WordCamp Seattle',

'location' => 'Seattle, WA, USA'

},

// more events here

}

}

This way, we can still return a location to the client in the initial request, but we're not directly exposing the location details from ip2location at all. The previous approach was essentially the same thing, but the response.location lookup was being done in a subsequent request (see comment:17), rather than all at once.

@barry, do you see any problems with that?

#25

@

@

8 years ago

It turned out to be even simpler than comment:21, since we already have the venue's location data in the events table. r5491 fixed #3 from comment:15.

Barry updated the geolocation data from ip2location.com, which fixed #1 and #2.

That just leaves #4 (performance), which Dion is working on, and #5 (automatic updates), which I'll look into once higher priority tasks are done.

#27

@

@

8 years ago

Added the above patch to address Problem 2, IPv6 support. It will need to get updated once we know the name of the table with the IPv6 location data.

#28

@

@

8 years ago

2823.diff takes a pretty harsh attack on some of the code in order to attempt to get the performance better for location searches.

get_city_from_coordinates()is gone - It's just not performant enough and I couldn't find an easy way to achieve decent performance for such an edgecaseget_valid_country_codes()wasn't actually being used (though was querying)guess_location_from_geonames_fallback()and as a resultget_ideographic_counties()is gone - this at first seems like a step backwards, however, I think what it's attempting to achieve was going about things the wrong way.REPLACE( alternatenames, CONCAT( asciiname, ' - ' ), '' ) LIKEwasn't performant and there wasn't much of a chance of it being so- using a

alternatenames LIKE '%,Blah,%'was working, but wasn't really fast

In order to speed things up, I created a new table based off the geoname data, geoname_summary which expands the (name, asciiname, alternatenames) out into a single name column, and also adds variants (for example the city name without dashes).

One major change in this is that if you type in, for example, The Big Apple the location returned will now be The Big Apple instead of New York City, similarly, if you search for Wien you'll get just that Wien not Vienna.

Unfortunately typo's will stay as typo's (for example, New Yorke or Lunden).

This might also make it easier to return multiple results in the future (Springfield, MO vs Springfield, MA for example, or allowing an en_AU locale to pull up Wellington, NZ instead of Wellington, AU).

All the test cases that passed are still passing albeit with the new return data, but instead of the ~100 queries taking 4-6 seconds, it now takes 0.04s for a slightly fewer number of queries.

This ticket was mentioned in Slack in #core by iandunn. View the logs.

8 years ago

This ticket was mentioned in Slack in #core by iandunn. View the logs.

8 years ago

#34

follow-up:

↓ 35

@

@

8 years ago

Its worth adding the oddity I experience here:

Firstly, I am nowhere near Romford (that's a pretty far away from where I am).

Secondly, as someone from UK, showing US City in the box is a weird experience. Also, why only cities? In UK for example towns have meetups and a lot of people don't live in cities.. this I am sure happens in US :)

#35

in reply to:

↑ 34

;

follow-up:

↓ 36

@

@

8 years ago

Replying to karmatosed:

Secondly, as someone from UK, showing US City in the box is a weird experience.

That string is internationalized, so you'll see a local city as soon as it's translated.

Also, why only cities?

The database we have is primarily city-centric. It has some region information, but it's not detailed or comprehensive enough to fully support. The API does try to match inputs other than cities, but we only explicitly mention cities in the instructions because that's the only thing we can guarantee will work.

#36

in reply to:

↑ 35

;

follow-up:

↓ 41

@

@

8 years ago

Replying to iandunn:

Replying to karmatosed:

Also, why only cities?

The database we have is primarily city-centric. It has some region information, but it's not detailed or comprehensive enough to fully support. The API does try to match inputs other than cities, but we only explicitly mention cities in the instructions because that's the only thing we can guarantee will work.

Worth noting that the database we've been using says "city" but in this context, city means town. We've used a data source which includes town with a population of >1k (or an administrative area of 1k, so town X of 500 and 500 in the surrounding area, where X is the common point would be included). For countrycode = GB there were 3.6k 'cities' with 14k different spellings and alternate names.

@

@

8 years ago

Approach using Google Geocode to power user lookups instead of attempting to roll our own geocode service - Google does it better, and doesn't receive any personal user details with a server-side request

#38

follow-up:

↓ 40

@

@

8 years ago

Searching for "Köln" gives me a "We couldn’t locate Köln." error but a search for "Cologne" or "Koeln" returns the proper "Köln". "Münster" is working fine so I'm not sure if it's an issue with umlauts.

Another oddity: "Düsseldorf" returns "Düsseldorf-Pempelfort" which is kinda unexpected because "Pempelfort" is just a city part of "Düsseldorf".

#39

@

@

8 years ago

As I mentioned in the devchat yesterday, searching for "Gardner, CO" gives events in Kansas City, MO...which is almost 700 miles away. Looking into the community-events-location meta_key, I see that the lat/lng stored is for KC, MO, which explains why it showing event for KC, MO.

However, if Otto's comment is correct that place name searches are using geonames.org data then that is odd, because http://www.geonames.org/search.html?q=gardner%2C+co&country= correctly finds Gardner, CO (with the correct lat/lng) as the 1st result.

#40

in reply to:

↑ 38

;

follow-up:

↓ 43

@

@

8 years ago

Replying to ocean90:

Searching for "Köln" gives me a "We couldn’t locate Köln." error but a search for "Cologne" or "Koeln" returns the proper "Köln". "Münster" is working fine so I'm not sure if it's an issue with umlauts.

Another oddity: "Düsseldorf" returns "Düsseldorf-Pempelfort" which is kinda unexpected because "Pempelfort" is just a city part of "Düsseldorf".

I think most of the charset issues have been worked out, but there's still at least one that I know about (see the unit tests).

@dd32 is working on upgrading our geocoding from geonames.org to Google's Geocoding API, which should fix all of that. See 2823.2.diff.

Replying to pbiron:

As I mentioned in the devchat yesterday, searching for "Gardner, CO" gives events in Kansas City, MO...which is almost 700 miles away. Looking into the community-events-location meta_key, I see that the lat/lng stored is for KC, MO, which explains why it showing event for KC, MO.

I think that's an edge case just because there's not enough information to distinguish between the two Kansas Citys, since they're so close to each other geographically.

[wp40774] might be able to help there, though, since we could use the IP geolocation as a signal. We'd also like to have the API start returning a list of alternate locations, and have Core display those in a dropdown for the user to choose. That'll probably have to be 4.8.1, though.

However, if Otto's comment is correct that place name searches are using geonames.org data then that is odd, because http://www.geonames.org/search.html?q=gardner%2C+co&country= correctly finds Gardner, CO (with the correct lat/lng) as the 1st result.

Otto's definitely correct, but the code does a lot of gymnastics to try and parse the query and look it up in a lot of ways. There could be a bug in there, or just some conflicting edge cases, etc.

Dion's work to switch to Google's API should help there too.

#41

in reply to:

↑ 36

;

follow-up:

↓ 42

@

@

8 years ago

Replying to dd32:

Worth noting that the database we've been using says "city" but in this context, city means town. We've used a data source which includes town with a population of >1k (or an administrative area of 1k, so town X of 500 and 500 in the surrounding area, where X is the common point would be included).

That probably explains the behavior I'm seeing...as Gardner, CO has a population of only 70. If the surrounding farms are included, what I call "Greater Metropolitan Gardner", the pop balloons to a whopping 400. Yes, I live in the sticks :-).

#42

in reply to:

↑ 41

@

@

8 years ago

Replying to pbiron:

Replying to dd32:

Worth noting that the database we've been using says "city" but in this context, city means town. We've used a data source which includes town with a population of >1k (or an administrative area of 1k, so town X of 500 and 500 in the surrounding area, where X is the common point would be included).

That probably explains the behavior I'm seeing...as Gardner, CO has a population of only 70. If the surrounding farms are included, what I call "Greater Metropolitan Gardner", the pop balloons to a whopping 400. Yes, I live in the sticks :-).

No shame in that :)

I think the only reason we limited it to towns with > 1k population is just because, for an endpoint that will see as much traffic as this one, we don't have the server resources to do the database queries on larger data sets. Once we switch to Google's API, though, that should solve that problem.

#43

in reply to:

↑ 40

@

@

8 years ago

Replying to iandunn:

Replying to pbiron:

As I mentioned in the devchat yesterday, searching for "Gardner, CO" gives events in Kansas City, MO...which is almost 700 miles away. Looking into the community-events-location meta_key, I see that the lat/lng stored is for KC, MO, which explains why it showing event for KC, MO.

I think that's an edge case just because there's not enough information to distinguish between the two Kansas Citys, since they're so close to each other geographically.

Sorry, "KC, MO" was just my abbreviation for "Kansas City, MO"...I should have been more clear.

#51

@

@

8 years ago

r5543 merges the last bits of 2823.diff, so the performance issues should be sorted now.

The two things still left in this ticket are:

- Switching to an external geocoding API for better accuracy. I'm talk to a Google rep today about ToS, pricing, etc. If that goes well, I'll work on merging 2823.2.diff. I'd like to keep the geonames stuff around as a fallback, though, at least for a few weeks. Depending on pricing, we may also want to keep it around permenantly, and use it for queries that we are confident will return good results from the geonames.org data, and then only use the external API for requests that are likely to be get better results from it.

- Automating the ip2location updates. I think I have everything I need to finish the script now, so I'll be working on this today.

#53

@

@

6 years ago

- Resolution set to fixed

- Status changed from accepted to closed

Switching to an external geocoding API for better accuracy.

3 years later, we've still not done that. I think we can shelve that discussion for a later date.

Automating the ip2location updates. I think I have everything I need to finish the script now, so I'll be working on this today.

This still hasn't been done, but is updated often enough.

I'm going to close this ticket as fixed, we can pull it back up in the future if needed.

If you're using the data I think you're using, then it almost certainly comes from the free data set at http://www.geonames.org/